Is CFD on GPUs ready for aerospace and beyond? A deep dive into Fluent’s native GPU solver

Based on a Master’s Thesis by Filip Gustafsson & Gustav Rönn, Chalmers University of Technology (2025)

With insights from Björn Bragée (Technical Account Manager, EDRMedeso) and Anton Persson (Business Developer, EDRMedeso)

As computational demands grow across engineering disciplines, the pressure to shorten simulation times without compromising fidelity is only increasing. In the world of Computational Fluid Dynamics (CFD), this means rethinking not just numerics and turbulence models, but also hardware.

Graphics Processing Units (GPUs), long associated with gaming and AI, have emerged as a game-changer for high-performance CFD. But while industries such as automotive have embraced GPU-accelerated solvers for external aerodynamics simulations, adoption in aerospace, for instance, remains cautious. Why? The physics are more complex, and the stakes are higher because of requirements on design accuracy, certification, and safety.

Gustav and Filip present their findings to the EDRMedeso team

To address this gap, two master’s students at Chalmers University of Technology took on a project in collaboration with GKN Aerospace, Rescale, and EDRMedeso: a rigorous, independent evaluation of Ansys Fluent’s native GPU solver in aerospace-relevant applications.

“We’ve seen some bold performance claims since the LiveGX CFD-solver was released for Fluent a few years ago,” says Björn Bragée, Technical Account Manager at EDRMedeso and initiator of the project. “But we wanted an objective analysis in real-world use cases, something our customers could rely on when making investment decisions.”

The study focused on three representative CFD cases with direct relevance to aerospace: a rotor case, a TRS case, and a supersonic nozzle case.

Each case was simulated using both the Fluent CPU solver and the native GPU solver, introduced in Ansys Fluent 2023 R1 and evaluated here using the 2025 R1 release. Tests were run on modern CPU (Intel Xeon Gold and Platinum series) and GPU (Nvidia A100 and H100) hardware, with configurations scaled to investigate performance under real-world HPC conditions.

These findings align closely with internal benchmarks conducted by EDRMedeso for its customers.

“The speed-up we’ve seen across various applications, especially for rotating machinery and external aerodynamics, is in line with the results from the thesis. This kind of independent confirmation is very valuable for companies that are trying to quantify the return on investing in GPU infrastructure.” – Anton Persson, Business Developer at EDRMedeso

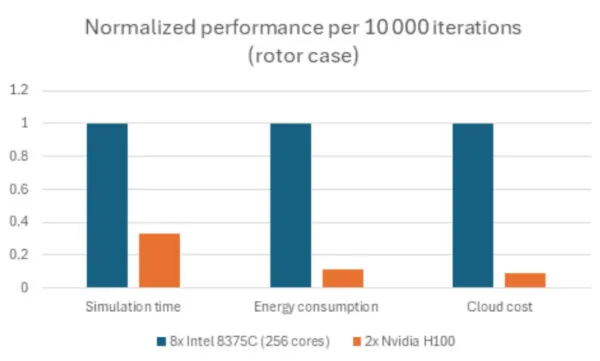

Comparison of simulation time, energy consumption, and cloud cost for the rotor case running on 256 CPU cores vs. 2 GPUs for 10 000 iterations. Note that the GPU solver needed fewer iterations per time step to converge, so the advantage is even larger once you account for the fewer iterations needed to finish the simulation.
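The point about iteration counts can be made concrete with a small calculation. The sketch below is illustrative only: the function name and all input values are hypothetical placeholders, not figures from the thesis. It shows how a per-iteration speed-up and a per-time-step speed-up differ when one solver converges in fewer iterations.

```python
def effective_speedup(cpu_s_per_iter, gpu_s_per_iter,
                      cpu_iters_per_step, gpu_iters_per_step):
    """Return (per-iteration speed-up, per-time-step speed-up).

    The per-time-step value also accounts for how many iterations each
    solver needs to converge within one time step.
    """
    per_iter = cpu_s_per_iter / gpu_s_per_iter
    per_step = (cpu_s_per_iter * cpu_iters_per_step) / (
        gpu_s_per_iter * gpu_iters_per_step
    )
    return per_iter, per_step


# Hypothetical placeholder inputs, NOT measurements from the thesis:
# 2.0 s vs 1.0 s per iteration, 20 vs 10 iterations per time step.
per_iter, per_step = effective_speedup(2.0, 1.0, 20, 10)
print(f"per-iteration speed-up: {per_iter:.1f}x")
print(f"per-time-step speed-up: {per_step:.1f}x")
```

With equal iteration counts the two numbers coincide. Any reduction in iterations per time step on the GPU side multiplies the per-iteration gain.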

While the performance gains are substantial, the GPU solver still lags behind the CPU version in terms of feature support, particularly for advanced or multiphysics problems.

In the TRS case, several adjustments had to be made to boundary conditions to ensure compatibility, including translating cylindrical velocity profiles into Cartesian coordinates. While this required some workarounds, the solver still produced accurate, converged results comparable to both CPU results and experimental data.
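The coordinate translation mentioned above follows the standard rotation between cylindrical and Cartesian velocity components. The following is a minimal sketch of that conversion, not the procedure used in the thesis. It assumes the machine axis is the z-axis and that the angle is measured from +x toward +y. The function name and the sample profile points are hypothetical.

```python
import math


def cylindrical_to_cartesian_velocity(x, y, v_r, v_theta, v_axial):
    """Convert (v_r, v_theta, v_axial) at point (x, y) to (v_x, v_y, v_z).

    Assumption: the z-axis is the axial direction and theta = atan2(y, x).
    """
    theta = math.atan2(y, x)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    v_x = v_r * cos_t - v_theta * sin_t
    v_y = v_r * sin_t + v_theta * cos_t
    return v_x, v_y, v_axial


# Hypothetical profile points: (x, y, v_r, v_theta, v_axial)
profile = [
    (1.0, 0.0, 2.0, 3.0, 50.0),  # on the +x axis: v_x = v_r, v_y = v_theta
    (0.0, 1.0, 0.0, 1.0, 50.0),  # on the +y axis: pure swirl points toward -x
]
cartesian = [cylindrical_to_cartesian_velocity(*p) for p in profile]
```

In practice the converted components would be written out in whatever profile format the solver accepts. That step is omitted here because it depends on the case setup.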

However, not all test cases were successful. The supersonic nozzle case turned out not to be directly portable to the GPU solver, as it does not yet support 2D calculations. It was possible to run the case on a 90-degree section of the axisymmetric nozzle, but this offered no time savings compared to staying with a two-dimensional approach on CPUs.

As of Fluent 2025 R1, core capabilities like standard RANS and scale-resolving turbulence models, heat transfer, combustion chemistry, and VOF for multiphase flows are supported. On the other hand, the density-based solver, transition RANS models, and some advanced boundary condition features and solver settings remain unavailable or limited.

“As of today, porting CPU cases to the GPU solver still requires a bit of hands-on engineering for some applications. But the development pace is rapid. Just a few releases ago, VOF wasn’t available; now it is. Adding functionality to make the GPU solver more generally applicable seems to be a highly prioritized task at Ansys.” – Björn Bragée, Technical Account Manager at EDRMedeso

The business case for GPU-based CFD isn’t only about faster simulations – it’s about unlocking new possibilities in engineering workflows. Shorter turnaround times allow teams to:

From a capital investment standpoint, two Nvidia H100 GPUs can deliver equivalent simulation throughput to 14 high-end Intel CPU nodes (448 cores), while consuming far less power and occupying less physical space.
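The equivalence quoted above can be broken down with simple arithmetic. The sketch below uses only the figures stated in the text (14 nodes, 448 cores, 2 GPUs). The variable names are illustrative, and the ratios apply to the benchmarked cases rather than to CFD workloads in general.

```python
cpu_nodes = 14   # from the text
cpu_cores = 448  # from the text
gpus = 2         # from the text

cores_per_node = cpu_cores // cpu_nodes  # 32 cores per CPU node
nodes_per_gpu = cpu_nodes / gpus         # 7 CPU nodes per GPU
cores_per_gpu = cpu_cores / gpus         # 224 CPU cores per GPU

print(f"{cores_per_node} cores per node")
print(f"one GPU ~ {nodes_per_gpu:.0f} nodes ~ {cores_per_gpu:.0f} cores")
```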

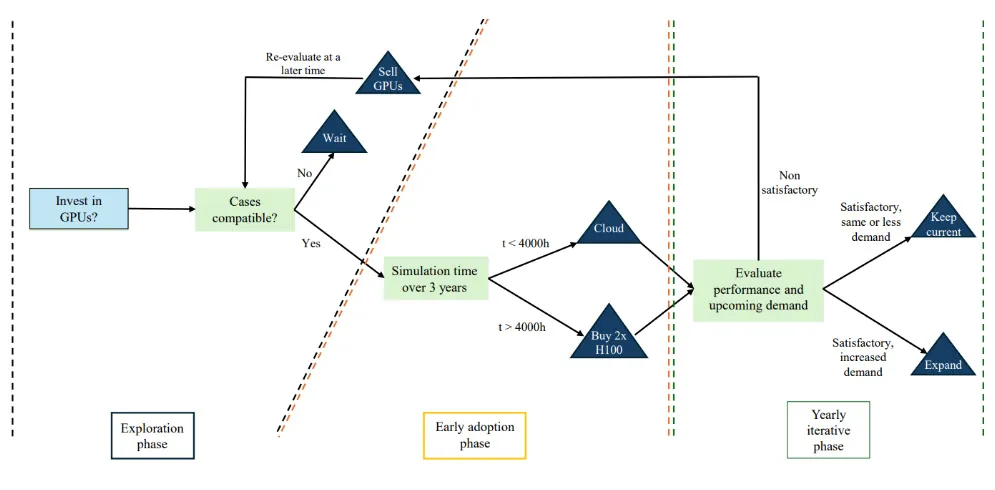

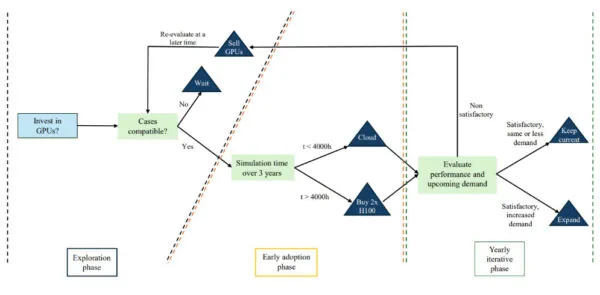

The thesis includes a clear decision-making framework to help engineering managers evaluate if and when to invest in GPU-based HPC clusters or cloud resources. The answer depends largely on simulation profiles, solver support, and strategic goals.

Gustafsson and Rönn’s suggested strategic implementation decision tree

With every new Fluent release, the capabilities of the GPU solver continue to grow, bringing it closer to parity with the CPU version in terms of physics support. For forward-thinking companies, now is the time to evaluate how GPU-powered simulation can fit into their digital engineering strategy.

“This work wasn’t just about benchmarking performance,” Björn Bragée concludes. “It has potential to reshape how engineering teams think about simulation. We hope that this work will act as a stepping stone in the adoption of GPUs for CFD in aerospace and other simulation-intensive sectors, leading to faster, greener, and ultimately, better results.”

Whether you’re running external aerodynamics, internal combustion, HVAC, or turbomachinery, GPU-powered CFD could significantly improve your simulation pipeline.

Contact EDRMedeso’s CFD team to set up a custom performance evaluation

You can read Filip Gustafsson and Gustav Rönn’s thesis here.